
DiffServ is the product of an IETF working group that has defined a more scalable way to apply IP QoS. It has particular relevance to service provider networks. DiffServ minimizes signaling and concentrates on aggregated flows and per-hop behavior (PHB) applied to a network-wide set of traffic classes. Arriving flows are classified according to predetermined rules, which aggregate many application flows into a limited and manageable set (perhaps 2 to 8) of class flows.
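The aggregation step can be pictured as a small ordered rule table evaluated at the edge. The sketch below is a minimal illustration, not a standard classifier. The `Packet` fields, the four class names, and the port ranges in `RULES` are all hypothetical choices. A real edge router would match on whatever fields its operator's policy specifies.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Packet:
    """Header fields an edge classifier might inspect (hypothetical model)."""
    src_ip: str
    dst_ip: str
    protocol: str  # "tcp" or "udp"
    src_port: int
    dst_port: int


# A rule pairs a match predicate with a class name; rules are tried in order.
Rule = tuple[Callable[[Packet], bool], str]

# Hypothetical provider policy: four classes, inside the "2 to 8" range.
RULES: list[Rule] = [
    (lambda p: p.protocol == "udp" and 16384 <= p.dst_port <= 32767, "voice"),
    (lambda p: p.protocol == "tcp" and p.dst_port in (22, 23), "interactive"),
    (lambda p: p.protocol == "tcp" and p.dst_port in (80, 443), "web"),
]
DEFAULT_CLASS = "best-effort"


def classify(pkt: Packet, rules: list[Rule] = RULES) -> str:
    """Return the class of the first matching rule; unmatched flows get best effort."""
    for matches, traffic_class in rules:
        if matches(pkt):
            return traffic_class
    return DEFAULT_CLASS
```

Many distinct application flows collapse into the same few class names. Downstream nodes only ever need to distinguish those names.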

Traffic entering the network domain at the edge router is first classified for consistent treatment at each transit router inside the network. Treatment will usually be applied by separating traffic into different queues according to the class of traffic, so that high-priority packets can be assigned the appropriate priority level at an output port.
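To make per-class queuing concrete, here is a minimal sketch of an output port. It keeps one FIFO per class and always serves the highest-priority non-empty queue. Strict priority is only one possible discipline, and it can starve lower classes under sustained load. The class names and the `PriorityOutputPort` type are hypothetical.

```python
from collections import deque
from typing import Any, Optional


class PriorityOutputPort:
    """One FIFO per traffic class, served in strict priority order."""

    def __init__(self, class_order: list[str]) -> None:
        self.class_order = class_order  # highest priority first
        self.queues: dict[str, deque] = {cls: deque() for cls in class_order}

    def enqueue(self, traffic_class: str, packet: Any) -> None:
        """Place a packet in the queue for its class."""
        self.queues[traffic_class].append(packet)

    def dequeue(self) -> Optional[Any]:
        """Transmit from the highest-priority non-empty queue; None if all are empty."""
        for cls in self.class_order:
            if self.queues[cls]:
                return self.queues[cls].popleft()
        return None
```

Swapping the `dequeue` policy for weighted round-robin or weighted fair queuing would leave the per-class separation unchanged and give lower classes a guaranteed share.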

The DiffServ approach separates the classification and queuing functions. Packets carry self-evident priority marking in the Type-of-Service (ToS) byte inside packet headers. (The ToS byte is part of the legacy IP architecture.) IP Precedence (IPP) uses the three high-order bits of this byte to define eight priority levels. DiffServ, its emerging replacement, redefines the ToS byte as the DS field and uses its six high-order bits as the DS codepoint (DSCP), allowing up to 64 codepoints. The remaining two bits were later assigned to Explicit Congestion Notification. The codepoint is interpreted as an index into a Per-Hop Behavior (PHB) that defines the way a single network node should treat a packet marked with this value, so that it will provide consistent multi-hop service. In many cases, PHBs are implemented using some of the queuing disciplines mentioned earlier. This method allows for an efficient index classification that is considered to be highly scalable and best suited for backbone use. However, translating PHBs into end-to-end QoS is not a trivial task. Moreover, inter-domain environments may require a concept known as “bandwidth brokerage,” which is still in the early research stage.
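The bit layout can be checked with a few shifts. This sketch assumes the RFC 2474 layout, with the DSCP in the six high-order bits of the DS byte, and the RFC 3168 use of the two low-order bits for ECN. The function names are illustrative. The sketch also shows why a router that only understands IP Precedence still reads a sensible value: the DSCP's top three bits occupy the old precedence bits.

```python
def _check_byte(value: int) -> None:
    """Reject values that do not fit in one header byte."""
    if not 0 <= value <= 0xFF:
        raise ValueError("ToS/DS byte must be in 0..255")


def ip_precedence(tos_byte: int) -> int:
    """Legacy IP Precedence: the three high-order bits (eight levels, 0..7)."""
    _check_byte(tos_byte)
    return tos_byte >> 5


def dscp(ds_byte: int) -> int:
    """DiffServ codepoint: the six high-order bits of the DS field (0..63)."""
    _check_byte(ds_byte)
    return ds_byte >> 2


def ds_byte_for(dscp_value: int, ecn: int = 0) -> int:
    """Assemble a DS byte from a DSCP and the two low-order ECN bits."""
    if not 0 <= dscp_value <= 63 or not 0 <= ecn <= 3:
        raise ValueError("DSCP must be 0..63 and ECN 0..3")
    return (dscp_value << 2) | ecn
```

For example, Expedited Forwarding (DSCP 46) gives `ds_byte_for(46) == 0xB8` (184). A precedence-only router reads that byte as `ip_precedence(0xB8) == 5`.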

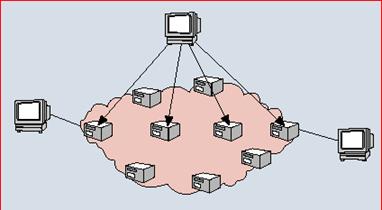

Figure 6 - End-to-end transport from host S to host D under the DiffServ architecture

DiffServ outlines an initial architectural philosophy that serves as a framework for inter-provider agreements and makes it possible to extend QoS beyond a single network domain. The DiffServ framework is more scalable than IntServ because it handles flow aggregates and minimizes signaling, thus avoiding the complexity of per-flow soft state at each node. DiffServ will likely be applied most commonly in enterprise backbones and in service provider networks.

There will be domains where IntServ and DiffServ coexist, so there is a need to interwork them at boundaries. This interworking will require a set of rules governing the aggregation of individual flows into class flows suitable for transport through a DiffServ domain. Several draft interworking schemes have been submitted to the IETF.

To provide QoS support, a network must somehow allow for controlled unfairness in the use of its resources. Control at the granularity of individual data flows requires advanced signaling protocols. Most of the data flows generated by different applications can ultimately be classified into a few general categories (i.e., traffic classes). By recognizing this, the DiffServ architecture aims to provide simple and scalable service differentiation. It does this by discriminating and treating the data flows according to their traffic class, thus providing a logical separation of the traffic in the different classes.

In DiffServ, scalability and flexibility are achieved by following a hierarchical model for network resource management:

- Interdomain resource management: Unidirectional service levels, and hence traffic contracts, are agreed at each boundary point between a customer and a provider for the traffic entering the provider network.

- Intradomain resource management: The service provider is solely responsible for the configuration and provisioning of resources within its domain (i.e., the network). Furthermore, service policies are also left to the provider.

At their boundaries, service providers build their offered services with a combination of three elements:

- traffic classes, to provide controlled unfairness;

- traffic conditioning, a function that modifies traffic characteristics to make the traffic conform to a traffic profile and thus ensure that traffic contracts are respected;

- billing, to control and balance service demand.

Provisioning and partitioning of both boundary and interior resources are the responsibility of the service provider and, as such, outside the scope of DiffServ. For example, DiffServ imposes neither the number of traffic classes nor their characteristics on a service provider.
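Traffic conditioning is easiest to see with a meter and marker. The following sketch is a simplified single-rate, two-color token-bucket meter, assumed here for illustration; RFC 2697 and RFC 2698 define the three-color markers commonly used in practice. Packets within the profile keep their codepoint. Excess packets are re-marked to a codepoint with higher drop precedence. The rate, the burst size, and the choice of AF11/AF12 (DSCPs 10 and 12) are example parameters. The caller supplies timestamps in seconds.

```python
class TokenBucketMarker:
    """Two-color meter/marker: conforming packets keep in_dscp, excess get out_dscp."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float,
                 start_time: float = 0.0) -> None:
        self.rate = rate_bytes_per_s
        self.burst = burst_bytes
        self.tokens = burst_bytes  # the bucket starts full
        self.last_time = start_time

    def conforms(self, now: float, size_bytes: int) -> bool:
        """Refill for elapsed time (capped at the burst size), then try to spend tokens."""
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last_time = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False  # out of profile: tokens are left untouched

    def mark(self, now: float, size_bytes: int, in_dscp: int, out_dscp: int) -> int:
        """Return the DSCP to write into the packet."""
        return in_dscp if self.conforms(now, size_bytes) else out_dscp
```

A shaper would delay the excess packet instead, and a dropper would discard it. Only the action taken when `conforms` returns `False` changes.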

Although traffic classes are nominally supported by interior routers, DiffServ does not impose any requirement on interior resources and functionality. For example, traffic conditioning (i.e., metering, marking, shaping, or dropping) in the interior of a network is left to the discretion of the service providers.

Suppose each packet conveyed across a service provider's network carries in its header an identification of the traffic class to which it belongs (called a DS codepoint). The network can then easily provide a different level of service to each class. It does this by treating the corresponding packets appropriately, for example by selecting the appropriate per-hop behavior (PHB) for each packet. In both IPv4 and IPv6, the traffic class is carried in the DS field, which supersedes the IPv4 ToS byte and the IPv6 Traffic Class octet.

It must be noted that DiffServ is based on local service agreements at customer/provider boundaries. Therefore, end-to-end services will be built by concatenating such local agreements at each domain boundary along the route to the final destination. The concatenation of local services to provide meaningful end-to-end services is still an open research issue.

The net result of the DiffServ approach is that per-flow state is avoided within the network, since individual flows are aggregated in classes.

The DiffServ architecture is an elegant way to provide much-needed service discrimination within a commercial network. Customers willing to pay more will see their applications receive better service than those paying less. This scheme exhibits an "auto-funding" property: "popular" traffic classes generate more revenue, which can be used to increase their provisioning.

A traffic class is a predefined aggregate of traffic. Compared with the aggregate of flows described earlier, traffic classes in DiffServ are accessible without signaling, which means they are readily available to applications without any setup delay. Consequently, traffic classes can provide qualitative or relative services to applications that cannot express their requirements quantitatively. This conforms to the original design philosophy of the Internet. An example of a qualitative service is "traffic offered at service level A will be delivered with low latency," while a relative service could be "traffic offered at service level A will be delivered with higher probability than traffic offered at service level B." Quantitative services can also be provided by DiffServ. A quantitative service might be "90 percent of in-profile traffic offered at service level C will be delivered."
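Checking the quantitative example against measurements is simple arithmetic once the provider counts the in-profile packets offered and delivered at level C over some interval. The function below is a hypothetical monitoring helper. The 90 percent target comes from the example; the counter names are assumptions.

```python
def meets_delivery_target(offered_in_profile: int, delivered_in_profile: int,
                          target: float = 0.90) -> bool:
    """True if the delivered fraction of in-profile traffic reaches the target."""
    if not 0 <= delivered_in_profile <= offered_in_profile:
        raise ValueError("counters must satisfy 0 <= delivered <= offered")
    if offered_in_profile == 0:
        return True  # nothing offered in this interval, so nothing to violate
    return delivered_in_profile / offered_in_profile >= target
```

Qualitative and relative services have no such single threshold to test. That is one reason they are easier to offer without signaling.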

Since the provisioning of traffic classes is left to the provider's discretion, this provisioning can, and in the near future will, be performed statically and manually. Hence, existing management tools and protocols can be used to that end. However, this does not rule out the possibility of more automatic procedures for provisioning.

The only functionality actually imposed by DiffServ in interior routers is packet classification. This classification is simpler than that in RSVP because it is based on a single IP header field containing the DS codepoint, rather than on multiple fields from different headers. This could allow functions performed on every packet, such as traffic policing or shaping, to be done at the boundaries of domains, so that forwarding is the main operation performed within the provider network.
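Because interior routers look at one field, their classifier can be a single table lookup keyed by the DSCP. The sketch below fills the table with the standard codepoints for Expedited Forwarding (46), the twelve Assured Forwarding codepoints (AFxy = 8x + 2y), and the class selectors (8n). The string PHB names are placeholders for whatever queue or scheduler a real router would bind to them. Unrecognized codepoints fall back to the default PHB, as RFC 2474 recommends.

```python
# DSCP -> PHB name; standard codepoint values, placeholder PHB labels.
PHB_TABLE: dict[int, str] = {0: "default", 46: "EF"}
for n in range(1, 8):
    PHB_TABLE[8 * n] = f"CS{n}"                 # class selectors CS1..CS7
for x in range(1, 5):
    for y in range(1, 4):
        PHB_TABLE[8 * x + 2 * y] = f"AF{x}{y}"  # AF class x, drop precedence y


def select_phb(ds_byte: int) -> str:
    """Behavior-aggregate classification: one lookup on the six DSCP bits."""
    return PHB_TABLE.get((ds_byte >> 2) & 0x3F, "default")
```

Contrast this with RSVP-style multi-field matching on addresses, ports, and protocol. Here the per-packet work is one constant-time lookup, regardless of how many flows share a class.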

Another advantage of DiffServ is that the classification of the traffic, and the subsequent selection of a DS codepoint for the packets, need not be performed in the end systems. Indeed, the traffic from the hosts can be classified (on a per-flow basis), marked, and shaped by any router in the stub network where the host resides, or by the ingress router at the boundary between the stub and provider networks. Such routers are the only points where per-flow classification may occur. This does not pose any problem because they are at the edge of the Internet, where flow concentration is low. The potential noninvolvement of end systems and the use of existing, widespread management tools and protocols allow swift and incremental deployment of the DiffServ architecture.
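When a host does mark its own traffic, the usual mechanism on Unix-like systems is the `IP_TOS` socket option, which Python exposes as `socket.IP_TOS`. This is a sketch under that assumption. Operating systems differ in whether they honor or override the value, and the stub or ingress router may re-mark the traffic regardless. The helper names are hypothetical.

```python
import socket

EF_DSCP = 46  # Expedited Forwarding codepoint


def tos_value(dscp_value: int) -> int:
    """Shift a DSCP into the DS byte, leaving the two ECN bits at zero."""
    if not 0 <= dscp_value <= 63:
        raise ValueError("DSCP must be in 0..63")
    return dscp_value << 2


def mark_socket(sock: socket.socket, dscp_value: int) -> None:
    """Request that outgoing IPv4 packets on this socket carry the given DSCP."""
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_value(dscp_value))
```

To use it, create a UDP socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `mark_socket(sock, EF_DSCP)` before sending. IPv6 sockets use a different option (`IPV6_TCLASS` on many platforms).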

Simultaneously providing several services with differing qualities within the same network is a very difficult task. Despite its apparent simplicity, DiffServ does not make this task any simpler. Instead, DiffServ was designed to keep the operating mode of the network simple by pushing as much complexity as possible onto network provisioning and configuration. Network provisioning and configuration require sophisticated tools and traffic models. So far, large networks have mainly offered a single type of service (best-effort service in the Internet, interactive voice in telephone networks, etc.). The provisioning of networks that provide multiple classes of service at the same time is therefore a rather new area. It is also quite challenging because of possible adverse interactions between different classes of service. The construction of end-to-end services by concatenating local service agreements is also a nontrivial issue.

The key to provisioning is knowledge of the traffic patterns and volumes traversing each node of the network. This also requires good knowledge of network topology and routing. The problem with the Internet is that provisioning will be performed on a much slower time scale than the time scales at which traffic dynamics and network dynamics (e.g., route changes) occur. This problem can be illustrated with the simplest case of a single service provider network whose service agreements with customers are static. Although the amount of traffic entering the domain is known and policed, it is impossible to guarantee that overloading of resources will be avoided. This is caused by two factors:

- The entering packets can be bound to any destination in the Internet, and may thus be routed towards any border router of the domain (except the one where they entered). In the worst case, a substantial proportion of the entering packets might all exit the domain through the same border router.

- Route changes can suddenly shift vast amounts of traffic from one router to another.
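A small worked example shows why policing at the ingress does not bound the load at any one egress. Assume, hypothetically, four border routers A to D, each policing 100 Mb/s of premium traffic, with 150 Mb/s of premium capacity on D's exit link. In the worst case, everything admitted at A, B, and C heads for D.

```python
def worst_case_egress_load(ingress_limits_mbps: dict[str, float], egress: str) -> float:
    """Sum of policed rates at every other border router: all of it may exit at `egress`."""
    return sum(rate for router, rate in ingress_limits_mbps.items() if router != egress)


def may_overload(ingress_limits_mbps: dict[str, float], egress: str,
                 capacity_mbps: float) -> bool:
    """True if worst-case convergence exceeds the egress capacity for the class."""
    return worst_case_egress_load(ingress_limits_mbps, egress) > capacity_mbps


limits = {"A": 100.0, "B": 100.0, "C": 100.0, "D": 100.0}  # hypothetical policed rates
print(worst_case_egress_load(limits, "D"), may_overload(limits, "D", 150.0))  # 300.0 True
```

Every ingress contract is respected, yet D would need twice its provisioned capacity to avoid loss.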

Figure 7 - The trade-off between quality and efficiency.

Therefore, unless resources are massively overprovisioned in both interior and border routers, traffic and network dynamics can cause momentary violations of service agreements, especially those relating to quantitative services. On the other hand, massive overprovisioning results in a very poor statistical multiplexing gain, and is therefore inefficient and expensive, as illustrated in Figure 7.

To increase resource utilization in the network, service providers can trade generality and robustness for efficiency. For example, service providers may want to limit the amount of expensive resources dedicated to quantitative services. To do so, they can restrict each quantitative service contract to traffic between a specified pair of border routers in the domain. In such a case, the service would apply only to packets entering the domain at a designated ingress router and leaving the domain at a designated egress router. This helps solve the first problem described above at the cost of generality, since only packets bound for destinations "served" through the egress router can benefit from the service. To ensure that the egress router is on the route to any given destination, the interdomain routing entry for that destination must be statically fixed in the ingress router.

Even for a fixed ingress-egress pair, intradomain routing dynamics can still occur. This means that the set of internal routers visited by the packets traveling between the ingress and egress routers can still change suddenly. However, the "directionality" of the traffic considered here is such that the number of possible routes is considerably reduced compared with the general case, and so is the resulting and necessary overprovisioning. A service provider could, however, reduce to a minimum the overprovisioning of quantitative services offered between pairs of border routers by "pinning" the intradomain route between those routers. Fixing the egress router for a given destination and/or pinning internal routes between border routers nevertheless incurs a loss of robustness. In multicast, where receivers can join and leave the communication at any time, the problem of efficient provisioning will be even worse.

Alternatively, a service provider might wish to use dynamic logical provisioning and configuration (i.e., sharing of resources between classes) as an answer to the problems of network and traffic dynamics. However, depending on the type of service agreement (qualitative, relative, or quantitative) and the QoS parameters involved in the agreement, dynamic logical provisioning might require signaling and admission control.

From the point of view of a flow, the class bandwidth is not a meaningful parameter. In fact, bandwidth is a class property shared by all the flows in the class. The bandwidth received by an individual flow depends on the number of competing flows in the class, as well as on the fairness of their respective responses to traffic conditions in the class. Therefore, to receive quantitative bandwidth guarantees, a flow must "reserve" its share of bandwidth along the data path. This involves some form of end-to-end signaling and admission control (at least among logical entities called bandwidth brokers). This end-to-end signaling should also track network dynamics (i.e., route changes) to enforce the guarantees, which can be very complex.
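The admission-control step can be sketched as a toy bandwidth broker. It knows the provisioned capacity of each class on each link and admits a reservation only if every link on the requested path has room. Everything here is hypothetical: the link names, the capacities, and the assumption that the broker is handed the path. In particular, the sketch does not track route changes, which the text identifies as the hard part.

```python
class BandwidthBroker:
    """Toy per-class admission control over (link, class) capacities in Mb/s."""

    def __init__(self, capacity: dict[tuple[str, str], float]) -> None:
        self.capacity = dict(capacity)
        self.reserved: dict[tuple[str, str], float] = {key: 0.0 for key in capacity}

    def request(self, path: list[str], traffic_class: str, mbps: float) -> bool:
        """Admit all-or-nothing: reserve on every link of the path, or on none."""
        keys = [(link, traffic_class) for link in path]
        for key in keys:
            # An unprovisioned (link, class) pair is treated as zero capacity.
            if self.reserved.get(key, 0.0) + mbps > self.capacity.get(key, 0.0):
                return False
        for key in keys:
            self.reserved[key] = self.reserved.get(key, 0.0) + mbps
        return True

    def release(self, path: list[str], traffic_class: str, mbps: float) -> None:
        """Return a previously admitted reservation to the pool."""
        for link in path:
            self.reserved[(link, traffic_class)] -= mbps
```

The broker needs state for every admitted reservation. That is why this scenario starts to resemble IntServ with aggregation.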

Furthermore, even relative bandwidth agreements require end-to-end signaling and admission control. Even if one class is guaranteed to have more bandwidth than another, the number and behavior of flows in the two classes may leave each flow in the higher-bandwidth class with a smaller share of bandwidth than a flow in the lower-bandwidth class. Hence, in this case, end-to-end signaling would also be required to ensure that, in every node along the path, the bandwidth received by a flow in a higher-bandwidth class is greater than the bandwidth received by a flow in a lower-bandwidth class.
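Here is a numeric illustration with made-up figures. Suppose the gold class is provisioned with 60 Mb/s on a link and the silver class with 40 Mb/s. Thirty flows share gold but only five share silver. Assume each class divides its bandwidth equally among its flows; this is an idealization, since real shares depend on how the flows respond to congestion. Under that assumption, each gold flow gets less than each silver flow.

```python
def per_flow_share(class_bandwidth_mbps: float, active_flows: int) -> float:
    """Equal split of a class's bandwidth among its active flows (idealized)."""
    if active_flows <= 0:
        raise ValueError("need at least one active flow")
    return class_bandwidth_mbps / active_flows


gold = per_flow_share(60.0, 30)    # 2.0 Mb/s per gold flow
silver = per_flow_share(40.0, 5)   # 8.0 Mb/s per silver flow
print(gold < silver)  # True: the "better" class gives each flow less
```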

On the other hand, delay and error rates are class properties that apply to every flow of a class. This is because, in every router visited, all the packets sent in a given class share the queue devoted to that class. Consequently, relative service agreements can be guaranteed without any signaling, as long as each router manages its queues to maintain a relative relationship between the delay and/or error rates of different classes. However, if quantitative delay or error rate bounds are required, end-to-end signaling and admission control are also required.

End-to-end signaling and admission control would increase the complexity of the DiffServ architecture. The idea of dynamically negotiable service agreements has also been suggested as a way of improving resource usage in the network. Such dynamic service-level agreements would require complex signaling, since the changes might affect the agreements a provider has with several neighboring networks. The time scale on which such dynamic provisioning could occur would be limited by scalability considerations, which in turn could impede its usefulness.

It is therefore believed that, in its simplest and most general form, DiffServ can efficiently provide pure relative service agreements on delay and error rates among classes. However, guarantees on bandwidth and quantitative bounds on delay and error rates cannot be provided unless complex signaling and admission control are introduced into the DiffServ architecture, or generality and robustness are sacrificed to some extent. Signaling could only be used by applications that meet two conditions: they can at least give a quantitative estimate of their requirements, and they reside on hosts that have been modified to support signaling. This limits the immediate usability of DiffServ.

It should be noted that, from a complexity point of view, a DiffServ scenario with dynamic provisioning and admission control is very close to an IntServ scenario with flow aggregation. The difference is that precise delay and error rate bounds might not be computable with DiffServ, since the delays and error rates introduced by each router in the domain may not be available to the bandwidth broker.

Consequently, DiffServ will undoubtedly improve support for a number of applications and is urgently needed in the Internet. Even so, it does not represent the ultimate solution for QoS support for all types of applications. The suitability of DiffServ for a given application could also depend on the context in which that application is being used. Internet telephony is a good example. DiffServ is suitable for providing a cheap solution for internal calls between remote sites of a company by emulating leased lines between these sites. However, DiffServ may prove unsuitable for the support of telephony over the Internet for the general public, because people do not usually restrict their calls to only a few destinations.

Figure 8 - Top-down provisioning of a differentiated services network

The current direction of the DiffServ working group calls for the provisioning and configuration of the DiffServ network to be done in a top-down manner, as illustrated in Figure 8. A final note is that DiffServ lays a valuable foundation for IP QoS, but it cannot provide an end-to-end QoS architecture by itself. DiffServ is a class-based framework. Achieving a real implementation with hard guarantees requires addressing an additional set of requirements, including:

- A set of DS field codepoints in lieu of standards.

- Quantitative descriptions of class performance attributes.

- A mechanism for efficiently aggregating many sources of premium class traffic that can converge at transit routers.

- A solution to the single-ended service level agreement (SLA) problem.

- An interworking solution for mapping IP CoS to ATM QoS.

- Management tools to facilitate deployment and operation.
